
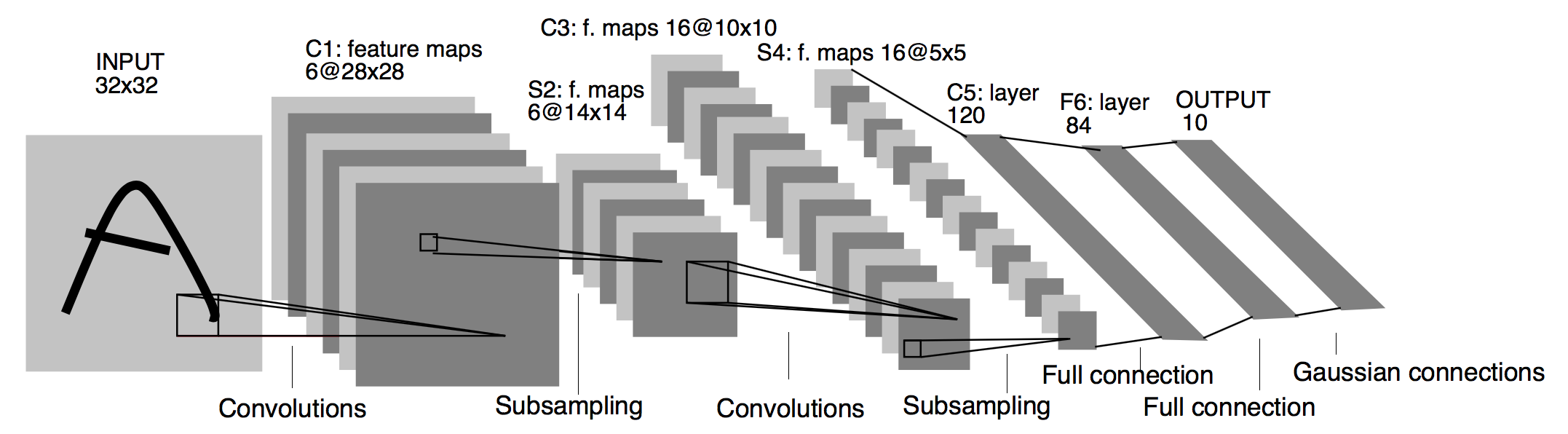

```
Model: "Basic-CNN"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         [(None, 28, 28, 1)]       0
_________________________________________________________________
conv2d_25 (Conv2D)           (None, 28, 28, 32)        320
_________________________________________________________________
activation_8 (Activation)    (None, 28, 28, 32)        0
_________________________________________________________________
conv2d_26 (Conv2D)           (None, 28, 28, 32)        9248
_________________________________________________________________
activation_9 (Activation)    (None, 28, 28, 32)        0
_________________________________________________________________
max_pooling2d_8 (MaxPooling2 (None, 14, 14, 32)        0
_________________________________________________________________
dropout_5 (Dropout)          (None, 14, 14, 32)        0
_________________________________________________________________
conv2d_27 (Conv2D)           (None, 14, 14, 64)        18496
_________________________________________________________________
activation_10 (Activation)   (None, 14, 14, 64)        0
_________________________________________________________________
conv2d_28 (Conv2D)           (None, 14, 14, 64)        36928
_________________________________________________________________
activation_11 (Activation)   (None, 14, 14, 64)        0
_________________________________________________________________
max_pooling2d_9 (MaxPooling2 (None, 7, 7, 64)          0
_________________________________________________________________
dropout_6 (Dropout)          (None, 7, 7, 64)          0
_________________________________________________________________
flatten_4 (Flatten)          (None, 3136)              0
_________________________________________________________________
dense_3 (Dense)              (None, 512)               1606144
_________________________________________________________________
activation_12 (Activation)   (None, 512)               0
_________________________________________________________________
dropout_7 (Dropout)          (None, 512)               0
_________________________________________________________________
dense_4 (Dense)              (None, 10)                5130
_________________________________________________________________
activation_13 (Activation)   (None, 10)                0
=================================================================
Total params: 1,676,266
Trainable params: 1,676,266
Non-trainable params: 0
_________________________________________________________________
```
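The parameter counts in this summary can be re-derived by hand, which is a useful sanity check on the architecture. The sketch below is a minimal re-derivation, assuming 3x3 kernels and `'same'` padding (inferred from the 320-parameter first convolution and from the unchanged 28x28 and 14x14 shapes) and 2x2 max-pooling (inferred from the halved shapes). The helper names `conv2d_params`, `dense_params` and `basic_cnn_rows` are hypothetical. Activation and Dropout layers are left out because they carry no weights and do not change the shape. The summary does not show which activation functions or dropout rates were used, so the sketch says nothing about them.

```python
from typing import List, Tuple

# (layer type, output shape without the batch dimension, trainable parameters)
Row = Tuple[str, Tuple[int, ...], int]


def conv2d_params(k: int, in_ch: int, out_ch: int) -> int:
    """k x k Conv2D: one k*k*in_ch kernel per output filter, plus one bias per filter."""
    return k * k * in_ch * out_ch + out_ch


def dense_params(in_units: int, out_units: int) -> int:
    """Dense: a full in_units x out_units weight matrix, plus one bias per output unit."""
    return in_units * out_units + out_units


def basic_cnn_rows() -> List[Row]:
    """Hypothetical re-derivation of the weight-bearing and reshaping layers of "Basic-CNN"."""
    h, w, c = 28, 28, 1                   # input: 28x28 single-channel image
    rows: List[Row] = []
    for filters in (32, 64):              # two convolutional blocks
        for _ in range(2):                # two 3x3 'same' convs per block keep h and w unchanged
            rows.append(("Conv2D", (h, w, filters), conv2d_params(3, c, filters)))
            c = filters
        h, w = h // 2, w // 2             # 2x2 max-pooling halves height and width, no weights
        rows.append(("MaxPooling2D", (h, w, c), 0))
    flat = h * w * c                      # Flatten: 7 * 7 * 64 = 3136 features
    rows.append(("Flatten", (flat,), 0))
    rows.append(("Dense", (512,), dense_params(flat, 512)))
    rows.append(("Dense", (10,), dense_params(512, 10)))
    return rows


if __name__ == "__main__":
    rows = basic_cnn_rows()
    for name, shape, params in rows:
        print(f"{name:<14}{str(shape):<16}{params:>10,}")
    print(f"Total params: {sum(p for _, _, p in rows):,}")
```

Almost all of the weights sit in `dense_3`. Flattening a 7x7x64 feature map into 3,136 inputs and connecting them to 512 units accounts for 1,606,144 of the 1,676,266 parameters, roughly 96% of the model.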
